CROSS-REFERENCE TO RELATED APPLICATIONS

[0001]The present application claims priority to Dutch Patent Application NL 1044409 filed on Aug. 30, 2022, the contents of which are incorporated by reference in their entirety.

TECHNICAL FIELD

[0002]The present disclosure relates to data processing performed and implemented in an information processing device, such as but not limited to a computer, a computing device, a virtual machine, a server, or a plurality of cooperatively operating computers, computing devices, virtual machines, or servers. In particular, the present disclosure relates to a method for providing and/or improving artificial intelligence by an information processing device, involving a data processing algorithm enabling artificial intelligence. More specifically, the present disclosure relates to acquiring, aligning, creating, generating, deploying, augmenting and modifying artificial intelligence, by an information processing device, from processing context-related and interrelated operational data and human participation data.

BACKGROUND

[0003]In general, Artificial Intelligence, AI, technology comprises the use and development of computer systems that can learn and adapt to perform tasks without being explicitly programmed, by using algorithms and statistical models.

[0004]AI technology is already widely integrated in today's modern society. Data processing systems using AI technology have proven to handle and complete rather complex tasks commonly associated with human beings with more precision and in less time than would be possible for humans.

[0005]Learning or training is one of the fundamental building blocks of AI technology. From a conceptual standpoint, learning is a process that acquires or imparts and/or improves the knowledge of a data processing algorithm enabling artificial intelligence by making observations about its environment or context. From a technical standpoint, AI learning, specifically supervised AI learning, comprises processing of a set of input-output pairs for a specific function for predicting the outputs of new inputs.
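By way of non-limiting illustration only, supervised learning from input-output pairs can be sketched as follows. The sketch fits a straight line to a few pairs by ordinary least squares and then predicts the output for a new input. The function names and the data values are hypothetical and serve only to illustrate the principle.

```python
def fit_line(pairs):
    """Fit y = a*x + b to (x, y) pairs by ordinary least squares."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def predict(model, x):
    """Predict the output for a new input using the fitted line."""
    a, b = model
    return a * x + b


# Hypothetical input-output pairs generated from y = 2x + 1.
training_pairs = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
model = fit_line(training_pairs)
prediction = predict(model, 4.0)  # output predicted for an unseen input
```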

[0006]One category of AI systems presently available are so-called ‘task intelligent systems’, designed to automatically perform singular tasks, focused on highly specific technical domains, such as object recognition, facial recognition, speech recognition, game playing, autonomous vehicle operation, product recommendation, or internet searching. This category of AI systems is generally referred to as Artificial Narrow Intelligence, ANI, or weak AI.

[0007]Weak or narrow data processing algorithms enabling artificial intelligence are generally trained through many examples or iterations of their respective task, receiving performance feedback from an evaluation function, reward function, loss function, error function, etc. in order to reinforce or otherwise learn beneficial behavior and/or unlearn or disincentivize actions that do not lead to beneficial behavior or intended outcomes within the given context.

[0008]Reinforcement Learning, RL, is a machine learning technique that allows data processing algorithms or agents enabling artificial intelligence to learn by interacting with an environment and receiving feedback in the form of rewards or penalties for their actions. By exploring different approaches and evaluating their success, RL enables agents to improve by learning or discovering effective strategies for solving complex problems. In particular, Deep Reinforcement Learning, DRL, has emerged as a powerful approach for learning optimal policies for actions for AI systems. By combining Deep Learning, DL, which allows for agents to be efficiently trained on large datasets, and RL which enables agents to learn from experience, DRL allows agents to learn complex behaviors and make decisions in intricate environments.

[0009]The need for an evaluation function is why many current AI applications are games, such as chess, where the final game outcome or continuous game score can serve to evaluate in-game behaviors. Furthermore, games represent a context of limited dimensionality in which the dependencies between AI actions, context parameters, and game outcome or score can be successfully mapped. The game speed can be increased arbitrarily to allow for more training iterations per unit of time. The DRL approach, for example, was notably demonstrated by employing it for training agents to play several Atari™ and Nintendo™ games.

[0010]However, more scenarios, handling multiple, versatile and/or non-predefined tasks, do not have an objective evaluation function like a game score, and cannot arbitrarily be sped up. To interpret actions in more scenarios in terms of goal achievement, benefit, or appropriateness within the current context or a larger strategy, a human is needed to perform that interpretation. Humans can distill relevant dimensions or key factors from situations and evaluate the facts or the events that are taking place accordingly.

[0011]Experiments have been performed to impart human intelligence data into the processing of a task intelligent AI system, by human evaluation of a task performed or proposed by the system, such as a game.

[0012]Human observers may, for example, monitor an AI data processing algorithm traversing a space performing various actions, and explicitly communicate whether or not each change in movement direction or each chosen action is conducive to the goal. In this way, by the active feedback or guidance provided by the human user, the system successively learns the best possible policy or policies to perform a respective task.

[0013]Similarly, there are other instances where human intelligence is still explicitly required or otherwise beneficial in the process of training an AI. For example, a human's general or specific understanding of the larger context, including the temporal evolution of events in a scene, may be needed to properly identify relevant objects or events, to correct automatic decisions made by the AI, or to resolve ambiguity.

[0014]This has led to AI training or learning scenarios where human observers press buttons or provide spoken input or otherwise perform explicit ratings to indicate what is appropriate/inappropriate behavior, to manually provide labels, or otherwise to generate additional input by explicitly generating descriptors for concrete pieces of data such as images, videos, or audio to train a data processing algorithm enabling artificial intelligence operated by an information processing device.

[0015]ANI has in recent years reached human-level or even greater performance in specific, well-defined tasks through advances in Machine Learning, ML, and deep learning in particular, by processing larger amounts of operational or contextual data, among which environmental data, information data, measurement data, control data and state data of a plurality of devices operating in a particular well-defined context or environment. Especially when such data is additionally combined or otherwise associated with data provided based on human intelligence, such as labels, descriptors, or judgements, can an algorithm enabling artificial intelligence effectively achieve or even surpass human-level performance.

[0016]Importantly, however, while human experts may perform or handle a complex task or operation in a versatile and/or well-defined context or environment, they may not always be able to explicitly indicate how they perform the task or operation and on the basis of which contextual parameters or variables their interpretation and evaluation of the task or operation is performed, i.e. how a decision or result is reached. Therefore, the human-generated labels, descriptors, or judgements that ANI relies on for its own artificial intelligence may not be available for all tasks.

[0017]Non-limiting examples of such complex tasks or complex operations can be found in the field of healthcare diagnosis and troubleshooting, logistics planning and scheduling, financial decision making, driving a car on the road, et cetera.

[0018]For other tasks, labels, descriptors, or judgements can be produced to some extent by humans, but their production may be limited in scope and difficult to produce at the scale, complexity, and speed needed to keep up with current developments in artificial intelligence research.

[0019]The domain of Natural Language Processing, NLP, is at present the direction in which AI technology has made its most tremendous and important progress. Large Language Models, LLM, such as OpenAI's GPT™ series, have recently achieved impressive results in various NLP tasks, such as language generation, translation, and comprehension. Currently, the most successful LLMs are built using very large Transformer architectures—a Transformer is a type of neural network architecture that is based on the concept of self-attention, which allows the model to weigh the importance of different parts of the input sequence, as well as how they relate to one another—and are trained using both supervised and unsupervised training schemes on vast amounts of text data. By exposing the model to a large amount of text data, patterns and relationships between words and sentences are learned thus enabling optimal generation of output sequences.
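By way of non-limiting illustration only, the self-attention concept mentioned above can be sketched as scaled dot-product attention. As a simplifying assumption, the sketch uses the token vectors themselves as queries, keys and values, whereas practical Transformers apply learned projections first. All names and values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a list of token vectors.

    Simplification: queries, keys and values are the inputs themselves.
    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[j] * x[j][i] for j in range(len(x))) for i in range(d)])
    return outputs, weights


# Three hypothetical two-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` sums to one, so every output vector is a weighted average of the input vectors.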

[0020]For example, GPT-3 was trained on a massive dataset of over 570 GB of text data, encompassing books, articles, and websites. The model consists of 175 billion parameters, making it one of the largest language models ever developed. The resulting model is capable of generating coherent and realistic language, answering questions, and even translating text from one language to another. One of the most remarkable aspects of GPT-3 is its ability to perform zero-shot or few-shot learning. This means that the model can execute tasks for which it was not explicitly trained, simply by being prompted with some examples and a description of the task. For instance, the model can generate a new text piece on a specific topic, summarize a lengthy text, or even create computer code based on a natural language description of a program. The success of large language models like GPT-3 has demonstrated AI's potential for natural language processing and opened new possibilities for the development of conversational agents, chatbots, and other NLP applications. An improved version of GPT-3, namely GPT-3.5, was used as the baseline model for the now well-known chatbot ChatGPT™. ChatGPT™ was developed by finetuning the baseline model using Reinforcement Learning from Human Feedback, RLHF. This means that, by using manual feedback from human operators, the model performance was optimized towards matching human preferences, such as diminished bias and better adherence to a set of desired rules.

[0021]Even though RLHF is a powerful tool for enabling human-like behavior in AI models and improving AI alignment, it introduces its own challenges. RLHF relies on a large number of human evaluators, is hard to scale up, and is limited in the amount and type of feedback it can capture. Moreover, high-quality feedback can be slow and hard to obtain, as feedback is highly subjective and its consistency varies depending on the task, the interface, and the individual preferences, motivations and biases of humans. These challenges are further compounded by the limitations imposed by the Transformer architecture itself. Being computationally complex and resource-intensive, Transformers require significant computational power to run, posing constraints on the scalability and cost-effectiveness of the RLHF implementation.

[0022]Hence, to perform a rather complex task requiring human expert knowledge, the development of AI training or learning scenarios to train or learn a data processing algorithm enabling artificial intelligence to perform such an expert task or operation is very difficult and very time consuming because of the large training and calibration data sets involved, and sometimes hardly or even not feasible when the expert is not able to outline his/her strategy to perform the task or operation, or when the needed information cannot be explicitly produced at the scale or speed needed by the application due to inherent limitations in the communication of such information such as via button presses, for example.

[0023]Besides ANI systems, the broader and long-term goal is to create an AI for handling plural tasks in changing contexts with intelligence proportional to human general intelligence, also known as Artificial General Intelligence, AGI, or strong AI.

[0024]A key challenge in this context is the AI alignment problem, which entails ensuring that AI systems are designed to act in ways that are beneficial to humanity, aligning their objectives with human values and expectations. Solving this problem requires a profound understanding of both AI technologies and human behavior, along with the development of strategies and mechanisms to align the two effectively. Human values and expectations are another example of information that is difficult for humans to verbalize explicitly.

[0025]As will be appreciated, acquiring the necessary data, and developing training or learning schemes for training data processing algorithms enabling artificial intelligence to operate in various contexts and environments reflecting the interpretations and decision making by a human user, including those human mental strategies that may not be readily or explicitly expressible, or subjective human mental states such as error, surprise, agreement, understanding, or the like, appears practically infeasible using state-of-the-art AI training or learning techniques.

[0026]For various reasons it is also problematic and difficult to operate data processing algorithms enabling artificial intelligence handling multiple, versatile, and non-predefined tasks when working in a real-life or real-world context, that is, a non-predefined or authentic context or environment, occurring in reality or practice, as opposed to an imaginary, simulated, test or theoretical context, for example.

[0027]One problem in real-life contexts is the absence of a finite, a priori repertoire of known responses and states, and the resulting difficulty in identifying which of any number of perceived states bear specific relevance, for example.

[0028]The processing, by a data processing algorithm enabling artificial intelligence of operational data originating in relation to a real-life context or environment for performing context dependent valuations, interpretations, assertions, labelling, etc. presents a further problem, because this type of operations requires interpretation of the context or environment.

[0029]An example is paying closer attention to some aspects or features of the context than to others, based on a general understanding of these aspects or features and their role in the context as a whole, or based on creative insight into the role that these aspects or features may potentially play. This is a judgement that no objective sensor or data processing algorithm enabling artificial intelligence can make by itself, whereas a human can make such an interpretation.

[0030]Training of data processing algorithms enabling artificial intelligence or machine learning systems for operating in a real-life context and environment by a human user providing active feedback reflecting his or her interpretations, for example, is practically not feasible because of the large training and calibration data sets involved, making such training very time consuming and demanding for a human user.

[0031]Integrating human mental data in the processing of operational data originating in relation to a real-life context or environment, i.e., rendering the system neuroadaptive, presents a further problem because ambiguities and inconsistencies in the relationship between operational data and human mental data of a human participating in the context pose a potential source of error in the processing by a data processing algorithm enabling artificial intelligence.

SUMMARY

[0032]Embodiments provided herein provide methods and systems for providing, acquiring, aligning, creating, generating, augmenting and/or modifying Artificial Intelligence, AI, involving a data processing algorithm enabling artificial intelligence operated by an information processing device, based on at least one of human cognitive and affective responses and interpretations, human expectations, human logic, reasoning, judgements, and strategies, human values and morality. A data processing algorithm enabling artificial intelligence, hereinafter also referred to as an AI-enabled data processing algorithm, for the purpose of the present disclosure, is any data processing algorithm that allows information processing devices or machines to process data replicating human intelligence.

[0033]It is noted that an AI-enabled data processing algorithm applied with the method according to the present disclosure may possess none or may already possess a certain level of artificial intelligence, for example in that the AI-enabled data processing algorithm is able to recognize objects, devices, living beings etc. that operate or participate in a context or in that the AI-enabled data processing algorithm has already been initialized according to any of the aspects outlined below.

[0034]As such, the act or process of providing, acquiring, aligning, creating, generating, augmenting and/or modifying AI for the purpose of this disclosure includes all aspects commonly associated with the training of an AI-enabled data processing algorithm, including its initialization or initial creation and its later modification, also in a continuous fashion, i.e. continuous learning.

[0035]In a first aspect of the present disclosure, there is provided a method of providing artificial intelligence from human participation with a context, in particular a real-life context, the method performed by an information processing device operating at least one data processing algorithm enabling artificial intelligence, the information processing device performing the steps of:

- simultaneously collecting or sensing operational data originating from the context and human bio-signal data and human conduct data originating from or relating to the human participation with this context;

- identifying mental processes from at least one of the bio-signal data and the human conduct data, a mental process referring to an aspect of at least one of human cognition, emotion and individual mechanisms of human information processing;

- assigning mental categories, a mental category referring to at least one of the mental processes associated with an aspect of at least one of the operational data and the human conduct data, and

- providing the artificial intelligence from applying the mental categories by the at least one data processing algorithm enabling artificial intelligence.
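By way of non-limiting illustration only, the four steps above can be sketched as a small processing pipeline. All names, data values and the threshold are hypothetical, and the decoder shown is a trivial stand-in for a trained classifier rather than an implementation of the disclosed method.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One time-aligned observation (all fields are illustrative)."""
    timestamp: float
    operational: str   # aspect of the context, e.g. an observed event
    bio_signal: float  # a single bio-signal feature, assumed normalized
    conduct: str       # observed conduct, e.g. gaze direction


def identify_mental_processes(sample):
    """Stand-in decoder returning mental-process scores in [0, 1]."""
    level = min(max(sample.bio_signal, 0.0), 1.0)
    attending = 1.0 if sample.conduct == "gaze_on_target" else 0.0
    return {"surprise": level, "attention": attending}


def assign_mental_categories(samples, threshold=0.5):
    """Associate operational aspects with processes decoded above threshold."""
    categories = {}
    for s in samples:
        for process, score in identify_mental_processes(s).items():
            if score >= threshold:
                categories.setdefault(process, []).append(s.operational)
    return categories


def provide_to_algorithm(categories):
    """Turn categories into (operational aspect, category) training pairs."""
    return [(aspect, category)
            for category, aspects in sorted(categories.items())
            for aspect in aspects]


# Step 1: simultaneously collected data (hypothetical values).
samples = [
    Sample(0.0, "pedestrian_appears", 0.9, "gaze_on_target"),
    Sample(0.5, "billboard_passes", 0.1, "gaze_elsewhere"),
    Sample(1.0, "traffic_light_red", 0.2, "gaze_on_target"),
]
categories = assign_mental_categories(samples)      # steps 2 and 3
training_pairs = provide_to_algorithm(categories)   # step 4
```

In this sketch the resulting pairs could serve as implicitly obtained labels for a downstream learning algorithm.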

[0040]This aspect of the method disclosed is based on the insight that aspects of human knowledge, human intelligence, human values and morality et cetera, applied by a human participant in observing, operating or handling and completing a task or operation in a certain context or environment, can be efficiently acquired by sensing and associating human bio-signal data and human behavior or conduct data of the human participant in relation to respective operational data originating from or in that context or environment and simultaneously collected or sensed while performing the task or operation. This, in turn, relies on the fact that the human brain evaluates perceived information automatically, according to a subjective/personalized model of the world that includes human experiences, human knowledge, human innate abilities, a human value system and morality. These functions of the brain are reflected in the neuroelectric and neurochemical activity of the brain, which can be measured as bio-signals.

[0041]Bio-signals, in the context of the present disclosure, are body signals that are generated by or from human beings, and that can be continuously measured and monitored by commercially available sensors and devices, for example.

[0042]Human conduct data comprise any human expression, communication and physical activity by a human participant while observing, operating or handling and completing a task or operation in a certain context, such as gestures, body motions, facial expressions, etc. In the present method, intermediate or final decisions made and communicated through respective conduct by the human participant are also regarded as belonging to human conduct data.

[0043]Operational data may comprise physical data and/or virtual data originating from the context. The term virtual data refers to data available from a software program or software application operating in a respective context. That is, for acquiring or sensing this type of data no separate sensors, measurement equipment or other peripheral data recording equipment are required.

[0044]The method disclosed is based on the insight that activity that occurs, or specific patterns of activity that occur, in the human bio-signal data and/or human conduct data of a human participant while observing, operating or handling and completing a task or operation in a certain context or environment refers to mental processes, i.e. the internal processes that occur within the human brain, corresponding to specific aspects of human cognition, emotion or to individual mechanisms of information processing by the human participant.

[0045]The behavior or conduct of a human participating in a context not only may reveal which part of the context or environment, i.e. the applicable operational data, is perceived, attended to, or otherwise incorporated by the human while performing a task or operation, but may also be informative of mental processes such as the individual mechanisms of information processing by the human participant, as well as strategy, logic, or knowledge applied by the human. A human expert, for example, may observe just shortly or pay no or less attention to operational data that, according to his or her knowledge, are less or even not important to his or her final decision.

[0046]Mental processes may be identified or decoded using so-called classifiers or classification algorithms. Such a classifier or classification algorithm automatically orders or categorizes the bio-signal data and/or human conduct data collected or sensed by a respective sensor or sensors into one or more respective mental processes. Classifiers are commercially available or may be derived from experiments or training data, for example.

[0047]As such, a mental process may also refer to—or be identified as—the output of a classifier decoding the presence or extent of a corresponding brain activity, for example as a real number between 0 and 1. It is noted that multiple mental processes may occur at the same time.
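By way of non-limiting illustration only, a classifier output expressed as a real number between 0 and 1 can be sketched with a logistic function applied to a weighted sum of features. Several mental processes are decoded from the same feature vector to reflect that they may occur at the same time. The feature values, weights and biases are hypothetical; in practice they would be obtained from experiments or training data.

```python
import math


def decode(features, weights, bias):
    """Logistic score between 0 and 1 for one mental process."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical per-process parameters (weights, bias).
CLASSIFIERS = {
    "cognitive_load": ([1.5, -0.5], 0.0),
    "surprise": ([-0.2, 2.0], -1.0),
}


def decode_all(features):
    """Decode every mental process from the same feature vector."""
    return {name: decode(features, w, b) for name, (w, b) in CLASSIFIERS.items()}


scores = decode_all([0.8, 0.3])  # two hypothetical bio-signal features
```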

[0048]Examples of identifiable or decodable mental processes, i.e. respective classifiers, in accordance with the present disclosure comprise cognitive load, memory encoding, memory retrieval, perception, attention, error processing, emotion recognition, surprise, reward processing, pain, pattern recognition, intention, affect, valence, and arousal, among others.

[0049]Aside from simple judgments of ‘good’ and ‘bad’, humans are capable of interpreting information in more substantial ways. Specifically, human learning and understanding are often described as categorical, assigning objects and events in the environment to separate categories. These categories are then used as an efficient, low-dimensional representation underlying further reasoning and decision-making.

[0050]In accordance with the present disclosure, mental categories are assigned or formed referring to identified or decoded mental processes in association with an aspect or aspects or features of operational data and/or an aspect or aspects or features of human behavior or conduct data simultaneously collected or sensed while performing a task or operation by a human participant in a particular context or environment.

[0051]Mental categories are formed by the information processing device automatically, from analyzing and organizing the sensed bio-signal data and/or human conduct data, and the related sensed operational data and/or human conduct data. Analyzing may involve, for example, detection of respective co-occurring bio-signal data and/or human conduct data, certain patterns occurring in these data, etc.

[0052]Hence, in accordance with the present disclosure, mental categories are assigned or formed referring to identified or decoded mental processes in association with an aspect or aspects or feature(s) and/or part(s) of operational data and/or an aspect or aspects or feature(s) and/or part(s) of human behavior or conduct data simultaneously collected or sensed while performing a task or operation by a human participant in a particular context or environment.

[0053]Respective operational data and/or conduct data are associated, either categorically or probabilistically, with at least one mental category.
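By way of non-limiting illustration only, the difference between a probabilistic and a categorical association can be sketched as follows. The category names and evidence scores are hypothetical, and normalizing non-negative scores is only one assumed way of obtaining probabilities.

```python
def probabilistic_association(scores):
    """Normalize non-negative evidence scores into probabilities."""
    total = sum(scores.values())
    if total == 0:
        # No evidence at all: fall back to a uniform distribution.
        return {name: 1.0 / len(scores) for name in scores}
    return {name: value / total for name, value in scores.items()}


def categorical_association(scores):
    """Pick the single mental category with the strongest evidence."""
    return max(scores, key=scores.get)


# Hypothetical evidence linking one operational data item to categories.
evidence = {"relevant": 3.0, "irrelevant": 1.0, "ambiguous": 1.0}
probabilities = probabilistic_association(evidence)
category = categorical_association(evidence)
```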

[0054]The ability to recognize relevant patterns and form appropriate categories, innate to humans but often impossible to verbalize, is fundamental to how humans learn and produce intelligent behavior. In humans, such categories are created based on how the brain considers people, objects and actions et cetera are related and reflect what kind of learning may be going on in the brain, as these categories are created through experience, training, and instruction, for example.

[0055]Accordingly, by providing mental categories thus formed to the at least one AI enabled data processing algorithm, aspects of human intelligence, human values and morality reflected by the mental categories become available for either one or more of acquiring, aligning, creating, generating, augmenting and/or modifying data processing by the at least one AI enabled data processing algorithm, based on or incorporating human cognitive and affective responses, human subjective judgements, human expectations, human values and morality.

[0056]The present approach may be termed Neuroadaptive Category Learning, NCL, wherein the input from the human participant is implicitly provided by or acquired or derived from the collected bio-signal data and/or human conduct data.

[0057]NCL identifies and extracts mental categories for translating human thought processes into AI models, thereby closing the gap between human cognition and artificial intelligence. By using implicitly obtained input reflecting aspects of human intelligence and shifting our perspective from specific brain responses to a categorical view of understanding a task, neuroadaptively trained AI-enabled algorithms can outperform traditional AI learning or training methods by a significant margin.

[0058]Apart from increasing performance, NCL has a number of other advantages over traditional techniques employed to provide AI.

[0059]Identifying or decoding mental processes from the collected bio-signal data and/or the human conduct data does not rely on the active participation of the user; no additional actions are required on the part of the human to convey this information. NCL eliminates the need for pushing buttons, verbalization, or manual labeling, providing a more direct, natural and intuitive way for humans to communicate their preferences and evaluations. Overall, this saves time and resources, increases efficiency and streamlines the process, making it easier to scale up operations.

[0060]Besides, NCL may provide continuous and real-time feedback with high precision and resolution. Traditional feedback is given over large portions of data that first need to be processed and evaluated as a whole. In the case of LLMs, feedback is usually provided for the entire output (e.g., a complete paragraph or text), unable to evaluate subsections or individual components (e.g., individual words used within that text), making the process suboptimal. In contrast, NCL allows fine-grained feedback over individual aspects or features of the training data, while retaining overall output evaluation.
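By way of non-limiting illustration only, fine-grained feedback over individual components combined with an overall output evaluation can be sketched as follows. The sketch assumes that a decoded score, for example reflecting a negative response, is available for each word of a text. The words, scores and threshold are hypothetical.

```python
def token_feedback(tokens, scores, threshold=0.5):
    """Combine per-token scores with an overall evaluation of the output."""
    if len(tokens) != len(scores):
        raise ValueError("one score per token is required")
    flagged = [t for t, s in zip(tokens, scores) if s >= threshold]
    overall = sum(scores) / len(scores)
    return {
        "per_token": list(zip(tokens, scores)),  # fine-grained feedback
        "flagged": flagged,                      # components above threshold
        "overall": overall,                      # whole-output evaluation
    }


# Hypothetical decoded scores, one per word of a generated sentence.
tokens = ["the", "results", "were", "catastrophic"]
scores = [0.1, 0.2, 0.1, 0.8]
feedback = token_feedback(tokens, scores)
```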

[0061]In other words, information derived from bio-signal and/or behavior or conduct data provides more nuanced information regarding the human participant's decision-making process. In traditional AI training processes, human participants typically provide feedback after having completed the evaluation of a particular task or event and reached a conclusion about it. Intermediary decisions, judgments, and thought processes that occur throughout the performance and evaluation of operational data are ignored. As a result, traditional human input used for AI training only reflects the human participant's final assessment of the context without explicitly revealing the underlying reasoning or cognitive processes that led to their conclusion. NCL provides a more comprehensive understanding of how human participants arrive at their conclusions, enables the identification of key factors that influence decision making and facilitates alignment with human values.

[0062]Moreover, specifically in the case of NLP, for example, NCL can capture information on how individual language components relate to various mental and emotional states. This helps to identify when words and phrases are emotionally charged or when they have negative connotations, are unexpected, misused or are otherwise unwanted, significantly improving context understanding and affective and figurative speech.

[0063]Rather than developing and applying complex, laborious and time-consuming AI training or learning scenarios to train or learn an AI-enabled data processing algorithm to perform a task or operation, the present method effectively associates sensed interrelated operational, human bio-signal and human conduct data to extract or deduce a strategy or strategies and decision-making processes from observing the human user to train the AI-enabled data processing algorithm.

[0064]The present method is versatile and applicable in a variety of contexts from which operational data originate, in particular data pertaining to technological states and technological state changes of a technical device or devices operating in a respective context, and more particularly a device or devices controlled by the information processing device operating the at least one AI-enabled data processing algorithm.

[0065]For the purpose of the present disclosure, technological states comprise any of but not limited to device input states, device output states, device operational states, device game states, computer aided design states, computer simulated design states, computer peripheral device states, and computer-controlled machinery states and respective state changes. A technological state change in a context is any action undertaken by a piece of technology.

[0066]The term technology collectively refers to any and all (connected) technological elements in any potential situation or context. With a technology's state being its specific current configuration, a technological state change is thus any change in configuration that the technology undergoes.

[0067]In practice, physical operational data may be sensed by any number or types of sensors such as but not limited to cameras, thermal imagers, microphones, radar, lidar, chemical composition sensors, seismometers, gyroscopes, etc., and may thus be capable of recording a context and the events taking place within it to any possible degree of objective accuracy.

[0068]For the purpose of the present disclosure, organisms or living beings may also form part of a context and the acts performed thereby and behavior observed thereof are likewise considered as operational data originating from that context.

[0069]It is noted that operational data in the light of the present method also refers to information relating to a context as such, i.e., environmental information obtained from sources not directly controlled by the information processing device, such as the presence, appearance, and behavior of non-technological or non-context connected elements, or a weather forecast, for example. As such, the present method is similarly versatile and applicable in a variety of contexts from which operational data can be obtained by technology using any number and type of sensors.

[0070]The disclosed method's versatility is further achieved based on the insight that these operational data originating from a context can be expertly, efficiently, automatically, and implicitly interpreted by human intelligence. Aspects of this intelligence, derived or obtained, processed, and provided in the manner disclosed here using human bio-signals, human conduct data and operational data, can then be provided to the AI-enabled algorithm, allowing it to obtain meaningful inputs and function in contexts that would otherwise be too complex and/or too unstructured, specifically real-life contexts.

[0071]The term bio-signals refers to both electrical and non-electrical time-varying signals comprising any of human body bio-signals and measurements of human physiological structure and function, monitored or sensed by a number of commercially available sensors, for example, and operatively connected with the human participant, i.e. worn by or aimed at the human participant, including but not limited to at least one of direct and indirect measurements of electro-cardiac activity, body temperature, eye movements, pupillometric, hemodynamic, electromyographic, electrodermal, oculomotor, respiratory, salivary, gastrointestinal, genital activity and brain activity. Brain waves and other measures of brain activity are also bio-signals for the purpose of the present method.

[0072]The term indirect measurements here also refers to derived measures of bio-signals, including physiological parameters such as heart rate variability, gaze, peak amplitudes, power in specific frequency bands, and signal rise and recovery times, for example.
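By way of non-limiting illustration, a derived measure such as heart rate variability could be computed from a sequence of inter-beat (RR) intervals. The sketch below uses the root mean square of successive differences (RMSSD), one commonly used variability measure. The choice of RMSSD, the function name rmssd and the sample values are assumptions made for illustration only and are not specified by the present disclosure.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (in ms).

    Assumption: the intervals have already been extracted from an
    electro-cardiac recording by some upstream step not shown here.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical sample values, for illustration only.
rr = [800.0, 810.0, 790.0, 800.0]
hrv = rmssd(rr)
```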

[0073]Human conduct data may be monitored or sensed by a number of commercially available sensors, for example, operatively connected with the human participant, i.e. worn by or aimed at the human participant, including but not limited to input modalities comprising a keyboard, push buttons, switches, touch screen, mouse, joystick, electronic pencil/stylus, laser pointer, motion controller, game controller, microphones, cameras, thermal imagers, motion capture devices, pressure sensors, gyroscopes or other equipment for signaling a selection or decision for example.

[0074]For the purpose of the present disclosure, the term ‘simultaneously collected or sensed’ with respect to the operational data, human bio-signal data and human conduct data points out that these data are related with respect to their occurrence in time while a human participant is performing a task or operation or is otherwise involved with a context or environment. The respective data may be available in real-time or quasi real-time, i.e. having a close approximation to real-time data, due to an initial processing or measurement or sensing delay, for example.
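As a minimal sketch of how data that are related in time might be brought together, each operational event can be paired with the nearest bio-signal and conduct samples within a tolerance window. The function names, the record layout and the 50 ms tolerance are hypothetical and chosen for illustration; the present disclosure does not prescribe a particular alignment procedure.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t, tolerance):
    """Return the value whose timestamp is closest to t, or None if the
    closest sample lies further away than the tolerance (in seconds)."""
    i = bisect_left(timestamps, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    return values[best] if abs(timestamps[best] - t) <= tolerance else None


def align(operational, bio, conduct, tolerance=0.05):
    """Each argument is a time-sorted list of (timestamp_seconds, value)."""
    bio_t, bio_v = [t for t, _ in bio], [v for _, v in bio]
    con_t, con_v = [t for t, _ in conduct], [v for _, v in conduct]
    return [
        {
            "t": t,
            "operational": op,
            "bio": nearest_sample(bio_t, bio_v, t, tolerance),
            "conduct": nearest_sample(con_t, con_v, t, tolerance),
        }
        for t, op in operational
    ]


# Hypothetical streams, for illustration only.
operational = [(1.00, "obstacle_appears"), (2.00, "light_turns_green")]
bio = [(0.98, 0.7), (1.50, 0.2), (2.03, 0.4)]
conduct = [(1.02, "brake"), (2.60, "accelerate")]
records = align(operational, bio, conduct)
```

A sample that falls outside the tolerance is reported as None rather than being paired with an unrelated event, which keeps quasi real-time delays from silently producing wrong associations.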

[0075]It will be appreciated that in accordance with the present disclosure mental processes and mental categories may be identified or assigned in an automated manner in real-time or quasi real-time, and likewise the behavior of the AI enabled data processing algorithm.

[0076]The automatic formation of mental categories may comprise multiple iterations of, for example, selecting, categorizing, clustering, projecting, partitioning, and transforming the categories and their constituent elements based on features and patterns in the operational data, human bio-signal data, and human conduct data.

[0077]Non-limiting examples of contexts or fields in which the present method can be applied for solving existing problems or extending the AI capabilities of given systems are human-computer interaction, human-machine systems, human-robotic interaction, robotics, assistive technologies, medical technology, treatment/curing of health conditions, cyber security and cyber technology, as well as the sectors of law enforcement and interrogation, border security systems at airports, mind control, psychological modification and weapon systems, or combinations of such fields.

[0078]In an embodiment of the present disclosure, the information processing device further performs the steps of:

- identifying mental states from simultaneous mental processes, a mental state referring to a condition of at least one of human cognition, emotion and individual mechanisms of human information processing;

- constructing a multi-dimensional mental state data space, wherein a respective mental process forms a dimension of such multi-dimensional mental state data space, and

- assigning mental categories in the multi-dimensional mental state data space, a mental category referring to a particular subspace of the multi-dimensional mental state data space associated with aspects or features of at least one of the operational data and the conduct data.

[0082]To gain a deeper understanding of an individual's cognition, it is beneficial to look at more than just isolated mental processes. In the context of this disclosure, a mental state refers to a higher-order condition or status of cognition or emotion, incorporating and combining multiple individual simultaneous mental processes. Different mental states correspond to different combinations of mental processes. For example, specific aspects of memory, attention, perception, et cetera, i.e., mental processes, may be combined to form identifiable interpretations, thoughts, attitudes, or feelings.

[0083]As such, different mental processes interact and influence each other to produce an overall mental state, which can be understood as a combination of different mental processes operating in parallel. Hence, in accordance with the present disclosure, a Multi-Dimensional Mental State, MDMS, data space is constructed, spanned by a combination of multiple simultaneous mental processes.

[0084]Any point in the MDMS space corresponds to a specific mental state, as defined by the specific extent of the activity of the underlying mental processes or the specific output of the corresponding classifiers. Conversely, any specific combination of simultaneous mental process activity can be given a specific data point within the MDMS space.

[0085]Everything a human perceives, interprets, thinks, or otherwise processes using his/her intelligence elicits brain activity. Therefore, to the extent that this brain activity is captured by the individual mental processes/classifiers contributing to an MDMS space, everything a human perceives, etc., can be assigned to a point in that space.

[0086]However, the same thing perceived by a different person can end up on a different point in the same MDMS space. For example, a red teacup may end up on different MDMS locations depending on whether the human observer prefers tea or coffee, prefers red or white, prefers cups or glasses, has seen that exact teacup before or not, has ever seen any teacup before or not, has a bad memory of once breaking someone's beloved teacup or not, et cetera.

[0087]At the same time, all things such as the above-mentioned teacup sharing a particular subjective quality, e.g. where one person has a bad memory of once breaking someone's beloved teacup, may end up in the same location in the MDMS, or at least may end up in the same location in a subspace of the MDMS. As such, the point at which a given piece of perceived context data lands in an MDMS space will likely vary between persons, but will be relatively consistent within persons, reflecting their subjective interpretations.

[0088]The focus on the perception of individual objects served merely as an example. Because all human intelligence, including cognition, knowledge, expertise, values, and morality is a function of brain activity, individual mental processes and their combination into mental states can reveal relevant aspects of this intelligence.

[0089]Mental categories are now assigned or formed by identifying which subspaces of the MDMS data space are consistently associated with which aspects or features and/or part of the operational and/or conduct data simultaneously collected with the bio-signal data to reflect these aspects of intelligence. These associations can be of a statistical, probabilistic, logical, or categorial nature, for example.

[0090]For example, with access to human bio-signal data numerically representing various specific mental states constituting a mental state space, a mental category may be formed that comprises all operational data that relate to a specific location or set of locations in the mental state space covered by the bio-signal data.

[0091]This means a mental category reflects the consistency that exists between and/or within persons in how aspects or features and/or parts of operational and/or conduct data lead to different mental states, and hence helps to provide the element of human intelligence to the AI enabled data processing algorithm.

[0092]For example, when a teacup, a kettle, a teaspoon, East Asia, Boston, Jean-Luc Picard, and a sugar cube all land on the same subspace of the MDMS, this reveals a consistency that binds them together in that particular person's interpretation. This something, this common denominator of the operational and/or conduct data that consistently leads to the same mental state, provides a particular aspect of human intelligence, in this case meaning (e.g. “tea-related”). The subspace encompassing this mental state associated with the operational and/or conduct data is the mental category.
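As a hedged illustration of how items landing in the same region of an MDMS space might be grouped into a mental category, the sketch below uses a simple greedy distance-threshold grouping. This grouping rule, the two-dimensional coordinates, the radius and all names are assumptions made for illustration; the disclosure leaves the actual association technique open (statistical, probabilistic, logical or categorial).

```python
import math


def distance(p, q):
    """Euclidean distance between two MDMS points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def form_categories(points, radius):
    """Greedy grouping: an item joins the first category whose centroid lies
    within `radius`; otherwise it starts a new category."""
    categories = []
    for name, point in points.items():
        for cat in categories:
            if distance(point, cat["centroid"]) <= radius:
                cat["items"].append(name)
                n = len(cat["items"])
                # Incrementally update the centroid with the new point.
                cat["centroid"] = [
                    c + (x - c) / n for c, x in zip(cat["centroid"], point)
                ]
                break
        else:
            categories.append({"centroid": list(point), "items": [name]})
    return categories


# Hypothetical MDMS coordinates for one person, for illustration only.
points = {
    "teacup": (0.80, 0.10),
    "kettle": (0.75, 0.15),
    "sugar cube": (0.85, 0.05),
    "wrench": (0.10, 0.90),
}
categories = form_categories(points, radius=0.2)
```

In this toy data the first three items fall into one group, which would play the role of the "tea-related" category, while the unrelated item forms its own group.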

[0093]Finding this person-specific common denominator from the operational and/or conduct data and identifying patterns in the elicited mental states to delimit specific subspaces in the MDMS space, in real-time or quasi real-time, is a complex process that requires automated data processing and lies beyond human manual capabilities.

[0094]That is, once established, providing mental categories to an AI enabled data processing algorithm along with operational data provides a layer of human intelligence or meaning based on subjective human interpretation the AI enabled data processing algorithm would not otherwise have access to, improving the learning performance of the AI enabled data processing algorithm.

[0095]The MDMS may be defined, for example, as a single numerical vector, where maximally complementary, i.e., ideally orthogonal, mental processes serve as individual dimensions in a multi-dimensional space. Each dimension of the MDMS is derived from the output of a classifier assessing one mental process, for example.
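A minimal sketch of such a numerical vector follows, assuming each dimension is the output of one classifier applied to the same window of bio-signal samples. The two placeholder "classifiers" and the dimension names are hypothetical stand-ins; real classifiers would be trained models, which are not shown here.

```python
from typing import Callable, Dict, List, Sequence


def mean_activity(window: Sequence[float]) -> float:
    """Placeholder classifier: mean of the window (illustrative only)."""
    return sum(window) / len(window)


def peak_activity(window: Sequence[float]) -> float:
    """Placeholder classifier: largest absolute sample (illustrative only)."""
    return max(abs(x) for x in window)


# Hypothetical mapping from mental process name to its classifier.
CLASSIFIERS: Dict[str, Callable[[Sequence[float]], float]] = {
    "process_a": mean_activity,
    "process_b": peak_activity,
}


def mdms_point(window: Sequence[float],
               classifiers: Dict[str, Callable[[Sequence[float]], float]] = CLASSIFIERS
               ) -> List[float]:
    """Return one MDMS point: one classifier output per dimension."""
    return [classify(window) for classify in classifiers.values()]


point = mdms_point([0.2, -0.4, 0.6, 0.0])
```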

[0096]In accordance with the present disclosure, a multi-dimensional mental state data space may be constructed comprising psychological, cognitive, affective, neurophysiological, and otherwise human mind-related states, including but not limited to aspects of at least one of reasoning, problem solving, planning, abstract thought, concluding, interpreting, thinking, prediction, reflection, creativity, imagination, strategy, logic, moral judgement, empathy, agreement, confusion, understanding, comprehension, engagement, and satisfaction.

[0097]As explained above, for the purposes of the present disclosure and claims, the identification of mental processes and mental states, the construction of an MDMS data space, and the assignment of mental categories may all be based on an automated analysis of collected bio-signal data and/or conduct data using appropriate classifiers or classification algorithms and performed in real-time or quasi-real time.

[0098]The automatic formation of mental categories may comprise multiple iterations of, for example, selecting, categorizing, clustering, projecting, and transforming the categories and their constituent elements based on features and patterns in the operational data, human bio-signal data, and human conduct data.

[0099]As will be appreciated, the present disclosure may also be applied on a selection of the related operational data, human bio-signal data and human conduct data by the information processing device, and such a selection may be based on at least one of the sensed operational data, human bio-signal data and human conduct data.

[0100]This may provide a reduction in the amount of data used for the training or learning of an AI-enabled data processing algorithm. Selection based on operational data allows, for example, objects with specific features to be included in further processing. Selection based on bio-signal data allows parts of the operational data to be identified that were associated with specific features in related bio-signal data, specific mental processes, specific mental states, or specific mental categories.

[0101]As such, a selection based on human bio-signal data in particular allows data to be selected that could not have been identified on the basis of operational data or other overtly observable data, for example only selecting sensed operational data that relate to a mental surprise state or arousal state of a human participant, or to a previously learned mental category specific to that individual.

[0102]Selection based on human conduct data allows the AI-enabled data processing algorithm to, for example, only consider operational data related to specific human actions. Combinations of these selection approaches give rise to a variety of selection and data reduction procedures available to the AI-enabled data processing algorithm or the human configurator thereof.
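The selection approaches described above could, for instance, be sketched as composable filters over time-aligned records. The record fields, the threshold of 0.7 and the function names are assumptions made for illustration only.

```python
def select(records, *predicates):
    """Keep only the records that satisfy every given predicate."""
    return [r for r in records if all(p(r) for p in predicates)]


def is_surprising(record):
    """Bio-signal-based selection (hypothetical surprise score threshold)."""
    return record["surprise"] >= 0.7


def is_braking(record):
    """Conduct-based selection (hypothetical conduct label)."""
    return record["conduct"] == "brake"


# Hypothetical time-aligned records, for illustration only.
records = [
    {"operational": "pedestrian", "surprise": 0.9, "conduct": "brake"},
    {"operational": "road sign", "surprise": 0.1, "conduct": "none"},
    {"operational": "cyclist", "surprise": 0.8, "conduct": "none"},
]

surprising = select(records, is_surprising)
surprising_and_braking = select(records, is_surprising, is_braking)
```

Passing several predicates combines the selection approaches, so only records meeting all criteria reach the training step.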

[0103]This can be advantageous, for example, when not all available data bears relevance to, or otherwise carries information pertaining to, the task the AI is learning to solve, thus providing a data filtering, selection, or reduction technique. Similarly, it can be advantageous, for example, while updating an already acquired AI or in case of limited processing power and/or limited data memory available to the data processing device, such as in mobile equipment, for example.

[0104]In an embodiment of the present disclosure, the step of assigning mental categories comprises at least one of:

- assigning at least one predetermined mental category;

- assigning at least one mental category based on at least one predetermined mental process;

- assigning at least one mental category based on at least one of selected human conduct data and selected operational data, and

- assigning at least one mental category determined from a different context.

[0109]This embodiment reflects the ability to include a priori knowledge. Generally, mental categories can be assigned based on data obtained from the ability of humans to recognize features from people, objects, things, concepts, actions, et cetera, and associate these with previously learned similar features, leading to the formation or refinement of categories.

[0110]Conversely, pre-existing or previously formed mental categories can be used to infer features from previously unexperienced objects, et cetera. Specifically, hierarchically inferior, more specific mental categories can inherit features and properties of hierarchically superior, more general mental categories. These mental categories thus represent logical units used internally by humans in the process of reasoning, perceiving, thinking, and decision making, for example. In the present disclosure, the operational data may be organized based on at least one predetermined mental category. For example, one or more mental categories related to known mental states of the human participant may be predetermined to form one or more mental categories. Providing such a predetermined mental category may, for example, guide the AI-enabled data processing algorithm to utilize a specific representation of the operational data possibly with associated predetermined logic, or to ignore particular operational data, for example, and may significantly reduce processing time by the information processing device.
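The inheritance of features from more general to more specific mental categories may be sketched as a walk up a parent chain. The category names, feature keys and data layout below are hypothetical and serve only to illustrate the idea.

```python
# Hypothetical category hierarchy, for illustration only.
CATEGORIES = {
    "beverage-related": {"parent": None, "features": {"consumable": True}},
    "tea-related": {"parent": "beverage-related", "features": {"served_hot": True}},
}


def inherited_features(name, categories):
    """Collect features from the most general ancestor down to `name`, so that
    more specific categories can override inherited values."""
    chain = []
    while name is not None:
        chain.append(name)
        name = categories[name]["parent"]
    features = {}
    for category in reversed(chain):
        features.update(categories[category]["features"])
    return features


tea_features = inherited_features("tea-related", CATEGORIES)
```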

[0111]Mental categories may be predetermined based on at least one predetermined mental process, e.g., a mental category related to “high arousal” or “positive valence”, at least one mental state, e.g. a mental category related to “high satisfaction” or “moral disagreement”, and/or based on aspects or features and/or parts of human conduct and operational data.

[0112]For the development of an AI-enabled algorithm specifically able to distinguish morally right from morally wrong decisions, it can be beneficial to predetermine MDMS subspaces and/or mental categories known to be related to human moral judgement, for example. Similarly, for AI-enabled algorithms specifically able to distinguish different types of objects, for example, some relevant objects may be associated in a predetermined manner to at least one mental category.

[0113]Furthermore, a mental category can be predetermined based on mental categories assigned in a different context, from a different participant, and/or from different data.

[0114]This embodiment is also advantageous for guiding the training of the AI-enabled data processing algorithm and reducing processing time by the information processing device, in particular when it is known beforehand that certain bio-signal data and/or human conduct data are representative for the human participation in a certain context.

[0115]In the human brain, categories may be sorted based on logical relationships, such as temporal relationships, which means that the brain recognizes that they tend to, or tend not to, pop up near one another at specific times, for example. A series of experiences that usually occur together, i.e. that are temporally related or interrelated, form an event until a non-temporally related experience occurs and marks the start of a new event. It has been found that the brain breaks experiences into events or related groups that help to mentally organize situations, using subconscious mental categories it creates.

[0116]Hence, in an embodiment of the present disclosure, artificial intelligence is provided by the information processing device based on a collection of mental categories, wherein a collection of mental categories is at least one of a set of operational data associated with mental categories and a set of collections of mental categories.

[0117]In this manner events and other logically connected parts of operational data can be formed, detected, represented and used by the artificial intelligence of an AI-enabled data processing algorithm.
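One simple way to form such events, assuming that temporal relatedness can be approximated by the time gap between successive experiences, is sketched below. The gap criterion, the threshold and the names are illustrative assumptions; other notions of relatedness are equally conceivable.

```python
def segment_events(experiences, max_gap):
    """Split a time-sorted list of (timestamp, mental_category) pairs into
    events; a gap larger than `max_gap` seconds starts a new event."""
    events = []
    for t, category in experiences:
        if events and t - events[-1][-1][0] <= max_gap:
            events[-1].append((t, category))
        else:
            events.append([(t, category)])
    return events


# Hypothetical experiences, for illustration only.
experiences = [(0.0, "tea"), (0.5, "tea"), (3.0, "traffic"), (3.2, "traffic")]
events = segment_events(experiences, max_gap=1.0)
```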

[0118]A partial or complete rank or hierarchy may be provided to mental categories, for example based on patterns observed in at least one of bio-signal data and operational data reflecting the rank, generality, specificity, selectivity, similarity, dissimilarity, overlap, or separation of mental categories. A partial or complete rank or hierarchy may furthermore be imposed on mental categories, for example based on either one or both of predetermined bio-signal data and predetermined human conduct data. Mental states having a relatively higher rank or hierarchical position may give rise to a higher relevance or a higher priority or immediacy of an action or operation by the AI-enabled data processing algorithm, for example, or may be used to transfer mental categories between contexts.

[0119]The term ‘collection of mental categories’ in the light of the present disclosure not only refers to a temporal relationship between mental categories, or between or within collections of mental categories, but also to logic relationships, including informal logic, formal logic, symbolic logic and mathematical logic, cause and effect relationships, hierarchical relationships, et cetera, all contributing to the knowledge of a human participant in performing a task or operation.

[0120]For example, when a person inspects two tea sets and chooses one, it is not clear why the one was chosen. The mental category associated with the person's perception of each set as a whole may have been the same. But two collections of mental categories corresponding to the inspection of each piece of each set, could reveal: tea, tea, coffee, tea for one set, and tea, tea, tea, tea for the other. The human reasoning may thus have been: the first set has one piece that does not seem fit for tea.
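The tea set example can be expressed as a comparison of two collections of mental categories, one category per inspected piece. The list representation and the helper name are assumptions made for illustration.

```python
def mismatches(collection, expected):
    """Return the positions of pieces whose mental category differs from
    the expected one."""
    return [i for i, category in enumerate(collection) if category != expected]


# Collections of mental categories as described in the example above.
set_one = ["tea", "tea", "coffee", "tea"]
set_two = ["tea", "tea", "tea", "tea"]

odd_pieces_one = mismatches(set_one, "tea")
odd_pieces_two = mismatches(set_two, "tea")
```

Here the first set contains one piece at position 2 that was not perceived as fit for tea, which is information a single whole-set category would not reveal.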

[0121]In an embodiment of the present disclosure, the artificial intelligence is acquired based on a collection of mental categories and corresponding human conduct data. By associating the way a human participant conducts or behaves in connection with a collection of mental categories, i.e. an event, information can be deduced about the relevance, meaning, importance and/or demarcation of a particular event in performing a task, operation or observation, specifically with respect to the human's subjective interpretation of such event.

[0122]Some forms of prior art supervised AI use labels to learn to identify, localize, differentiate between, or recommend different kinds of objects or events. This requires that humans manually provide these labels by explicitly generating descriptors for concrete pieces of data such as images, videos, text, or audio, for example.

[0123]In accordance with an embodiment of the present disclosure, wherein the at least one data processing algorithm enabling artificial intelligence comprises labels, the step of providing artificial intelligence comprises enhancing at least one of the labels based on at least one mental category.

[0124]With the present method, based on the mental categories disclosed above, such labelling is supported and performed faster for some types of descriptors, and may even provide descriptors that may not be possible to generate in any other way. The term enhancing may include creating, refinement, qualification, augmenting, ranking, et cetera of labels or descriptors used by an AI-enabled data processing algorithm.

[0125]As mentioned in the Background part above, human experts may not always be able to explicitly indicate how they perform the task or operation and on the basis of which contextual parameters or variables and their interpretation and evaluation the task or operation is performed, i.e. how a decision or result is reached. With the present method, training of the AI-enabled data processing algorithm is not limited to those data that can be consciously, explicitly generated by the human.

[0126]In an embodiment of the present disclosure, operational data are provoked by the information processing device.

[0127]For example, in case the AI-enabled data processing algorithm misses information to complete a task or process at hand or is otherwise not able to process data with sufficient quality and reliability, for example, aspects of the context may be momentarily adapted by the information processing device to provoke operational data to retrieve the required or missing information. That is, an aspect or aspects of the context may be adapted by introducing, changing, or deleting information, processes, tasks, events et cetera, or by otherwise initiating a technological state change to invoke a response of the participating human, either consciously or subconsciously.

[0128]This embodiment of the present disclosure in part relies on the insight that any such provoked operational data may be perceived, attended to, or otherwise incorporated by the human, and may thus automatically provoke related human bio-signal data and/or human conduct data. Such adaptation may involve any of the momentary actions or processes handled, a momentary technological state of devices operating in the context, but also adaptations to provoke virtual operational data.

[0129]In a further embodiment of the present disclosure, operational data are provoked by the information processing device to evoke at least one mental category or at least one collection of mental categories.

[0130]That is, the provocation of operational data serves a specific purpose identified by any of the algorithms operated on the information processing device, for example the purpose to invoke a particular mental response of the participating human, for example to complete, to enhance, to investigate, to test and/or to delete a particular collection of mental categories.

[0131]As another example, operational data may be provoked to aid the learning of the AI-enabled data processing algorithm, for example when it is identified that additional data may help to update, optimize, or otherwise fulfil specific criteria of any of its internal parameters or representations.

[0132]In this way, like in humans, knowledge, proclivities, preferences, and moral values can be built up interactively by the AI-enabled data processing algorithm, even on a trial-and-error basis, both during the training of the algorithm and while performing operations in contexts to improve itself by means of interactive learning, continuous learning, and cognitive/affective probing, i.e. during the deployment of the algorithm in contexts.

[0133]Hence, among others, the AI-enabled data processing algorithm may learn to incorporate moral values, problem solving strategies, preferences, associations, distributions of degrees of acceptance, etc. such that a convergence of human and machine intelligence is initiated and continuously pursued. Over time the AI-enabled data processing algorithm may learn about the human's subjective interpretations on a larger scale, building up a profile/model of that human's subjective interpretations within single or across multiple contexts.

[0134]By iteratively learning, provoking data, and learning from the provoked data, the AI-enabled data processing algorithm not only processes more data to become more intelligent but also, because of the provocation of specific mental categories and collections of mental categories from the human, learns to mimic the human's preferences, behaviors, interpretations, norms, and values given a respective context, thus becoming more alike in its own interpretations and actions to the human it learned from. The more data that become available from a person over time and in different contexts, the more history can build up a profile/model of that human's interpretations, knowledge, et cetera, which can be referred to as a cognitive copy.

[0135]The present method is practically applicable in various scenarios or contexts where humans interact with machines, personal computers, robots, avatars, and many other technical applications. Which type or types of bio-signal sensor is or are to be used, and/or how the sensing of human conduct data is to be performed, may be selected based on a particular context and/or a specific human participation, for example.

[0136]In an embodiment of the present disclosure, human brain activity data are processed by at least one Brain-Computer Interface, BCI, in particular at least one passive Brain-Computer Interface, pBCI, operating at least one classifier responsive to implicit human brain activity indicative of at least one mental process.

[0137]A BCI and a pBCI are tools to assess information about brain activity of an individual. A BCI, i.e., an active or reactive BCI, is built on brain activity that is generated or modulated, directly or indirectly, by a user with the intention to transfer specific control signals to a computer system, thereby replacing other means of input such as a keyboard or computer mouse.

[0138]A pBCI differs significantly from a BCI in that pBCI data are based on implicit or passive, involuntary, unintentional, or subconscious human participation with a context, different from explicit or active, i.e., conscious, voluntary, intentional, human interaction with the context. Instead of explicitly generated or modulated brain activity, a pBCI is designed to be responsive to naturally-occurring mental processes that were not intended for communication or control, but that can nonetheless be detected, decoded, and used as input to technology.

[0139]A pBCI distinguishes between different cognitive or affective aspects of a human user state, typically recorded through an electroencephalogram, EEG. An immediate neurophysiological activity of the human user in a context may be associated with the current mental state or specific aims of a user, by a respective classifier or classifiers operated by the pBCI. For the purpose of the present disclosure, multiple pBCIs each operating a different classifier directed to sense different brain activity associated with different specific mental processes of a human participant may be used. In practice, tens or hundreds of classifiers may be deployed.

[0140]The method presented is not limited to the processing of operational data, human bio-signal data and human conduct data of a single human individual participating in a context, but may also be practiced for the processing of operational data, human bio-signal data and human conduct data sensed of two or more, i.e., a group of individuals participating in a respective context. In the case of driving a car, for example, artificial intelligence by the AI-enabled data processing algorithm may be acquired from both the driver of the car and a passenger or passengers.

[0141]That is, the artificial intelligence acquired, aligned, created, generated, augmented and/or modified by an AI-enabled data processing algorithm in accordance with the present disclosure may be based on the intelligence, judgement, knowledge and skills of a plurality of persons. By processing data sensed of multiple persons involved, training of an AI-enabled data processing algorithm can be significantly sped up compared to training by a single user, or the training can make use of a group consensus or a majority vote rather than individual judgements, thus making the artificial intelligence more reliable, more robust or more general, for example.
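A group consensus or majority vote over the judgements of several participants might be sketched as follows. The tie handling (returning None), the use of an arithmetic mean for continuous scores and all names are assumptions made for illustration only.

```python
from collections import Counter
from statistics import mean


def majority_vote(judgements):
    """Return the most frequent judgement, or None when there is no
    judgement at all or the top count is tied."""
    if not judgements:
        return None
    ranked = Counter(judgements).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


def consensus_score(scores):
    """Average a continuous judgement over all participants."""
    return mean(scores)


# Hypothetical judgements from a driver and two passengers.
group_label = majority_vote(["accept", "accept", "reject"])
group_score = consensus_score([0.9, 0.7, 0.2])
```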

[0142]Likewise, in operation, differences in the evaluation and perception of operations and interactions among individuals of a group participating in a common context, such as differences in the mental categories and collections of mental categories among the individuals of a group, may reveal additional information for adapting the AI-enabled data processing algorithm more quickly compared to an individual user, for example.

[0143]Human bio-signal data and human conduct data may be sensed from each individual of a group separately, while operational data originating from the context may be sensed for the group as a whole, for a sub-group or for each individual separately, depending on the particular context, as will be appreciated.

[0144]Note that in the case of several human individuals participating in a context, these humans need not necessarily be located at the same geographic location. In such a case, some or all of the human individuals, or a group thereof, may participate in the context in that the context is replicated or is otherwise partly or completely made virtually available to the respective human individuals.

[0145]The method according to the present disclosure is excellently applicable for real-time processing of operational data originating from a context, in particular a real-life context, performed by an information processing device operating at least one data processing algorithm enabling artificial intelligence processing applying mental categories, in particular mental categories mapped at a multi-dimensional mental state data space, as disclosed above, in particular for the processing of data pertaining to a time-critical context.

[0146]Because the assessed human bio-signal data and human conduct data can not only be interpreted in binary form, such as correct or wrong, accept or reject, expected or unexpected, et cetera, but also continuously, i.e. any number between 0 and 1, or even minus infinity and infinity, for example, indicating a degree of subjective perception, reaction, or interpretation related to the perceived contextual event, the method presently presented may be used to support a wide variety of AI-enabled data processing algorithms for handling plural tasks in contexts with intelligence proportional to human general intelligence.

[0147]Hence, the at least one AI-enabled data processing algorithm operated for the purpose of the present disclosure may be any suitable data processing algorithm known in practice, such as but not limited to data processing algorithms based on (deep) reinforcement learning paradigms such as Q learning or Policy Gradient learning, or any supervised learning approach such as Support Vector Machines, Linear Discriminant Analysis, or Artificial Neural Networks with backpropagation learning, or unsupervised learning based on clustering, principal component analysis or other probabilistic methods, Transformer architectures, et cetera. The present method provides a tool to automatically provide artificial intelligence by assessing a human's interpretation of a perceived event in a given context, in real-time or quasi real-time after the occurrence of that event. This tool provides for both continuous and event-related monitoring of the mental processes of the human, allows an automated view into the subjective, situational observation and interpretation of a person, and allows this information to be made available for further processing, such as to transfer key aspects of the cognition and mindset of a human into a machine.

[0148]Building on this, it is found that through interactive learning the artificial intelligence can home in on and converge to particular aspects of the human mindset, reflecting this person's strategies, interpretations, preferences, intelligence and moral values, for example. The more data that become available from a person over time and in different contexts, the more history can be built up and the better the match to that person's intuitive intelligence that can be established, building up a profile or model of that human's subjective interpretations that can be referred to as a cognitive copy.

[0149]The previously mentioned ability of the AI-enabled algorithm to provoke data is particularly useful for this purpose of homing in on and converging to specific aspects. This may furthermore make use of an additional ability to repeat certain steps until specific criteria are met.

[0150]In accordance with an embodiment of the method according to the present disclosure, the information processing device is arranged for repeating the steps referring to identifying mental processes, assigning mental categories, and providing the artificial intelligence each time based on a differing selection of the collected data, until a result of human participation with the context and a result of operating with the context by the at least one data processing algorithm enabling artificial intelligence applying the mental categories match within predefined criteria. An embodiment of the present data processing method, implemented in an information processing device operating an AI-enabled data processing algorithm, comprises the steps of:

- collecting, by the information processing device, simultaneously sensed operational data originating from a context and human bio-signal data and human conduct data relating to human participation with this context;

- selecting, by the information processing device, based on at least one of the human bio-signal data and human conduct data, related operational data;

- assigning, by the information processing device, based on at least one of the human bio-signal data and human conduct data, a plurality of mental categories, a mental category comprising part of the selected operational data associated with at least one of the human bio-signal data and the human conduct data corresponding to that mental category;

- forming, by the information processing device, a collection of mental categories, wherein a collection of mental categories is at least one of a set of operational data associated with mental categories and a set of collections of mental categories;

- comparing, by the information processing device, a result of participating with the context by the human with a result of operating with the context by the at least one data processing algorithm enabling artificial intelligence based on the collection of mental categories formed;

- repeating, by the information processing device, the steps of assigning, forming and comparing until compared results match within predefined criteria; and

- providing, by the information processing device, based on matching results, artificial intelligence enabling the at least one data processing algorithm to process operational data originating from a context representing human participation with the context.
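
The steps listed above can be sketched, purely by way of illustration, as a small loop. Everything in this sketch is an assumption made for the example: the `Record` fields, the two category names, the selection by bio-signal strength, the nearest-mean rule used to operate with the context, and the agreement ratio used as the matching criterion. It is not the implementation of the present disclosure, and it assumes a non-empty list of records.

```python
from statistics import mean
from typing import Dict, List, NamedTuple, Optional


class Record(NamedTuple):
    operational: float  # sensed operational datum from the context
    bio_score: float    # decoded bio-signal response (hypothetical scale)
    human_result: str   # result of the human's participation


def select(records: List[Record], min_strength: float) -> List[Record]:
    """Select operational data whose bio-signal response is strong enough."""
    return [r for r in records if abs(r.bio_score) >= min_strength]


def assign_and_form(selected: List[Record]) -> Dict[str, List[float]]:
    """Assign each datum to a mental category and form the collection."""
    collection: Dict[str, List[float]] = {"accept": [], "reject": []}
    for r in selected:
        category = "accept" if r.bio_score > 0 else "reject"
        collection[category].append(r.operational)
    return collection


def operate(collection: Dict[str, List[float]], operational: float) -> str:
    """Algorithm's result: the category whose mean is nearest to the datum."""
    centres = {c: mean(v) for c, v in collection.items() if v}
    return min(centres, key=lambda c: abs(centres[c] - operational))


def compare(collection: Dict[str, List[float]], records: List[Record]) -> float:
    """Fraction of records where algorithm and human results agree."""
    hits = sum(operate(collection, r.operational) == r.human_result
               for r in records)
    return hits / len(records)


def provide(records: List[Record], strengths: List[float],
            criterion: float) -> Optional[Dict[str, List[float]]]:
    """Repeat with differing selections until the results match."""
    for min_strength in strengths:
        collection = assign_and_form(select(records, min_strength))
        if not any(collection.values()):
            continue  # nothing selected; try the next selection
        if compare(collection, records) >= criterion:
            return collection
    return None


# Collected data (invented values); the last two carry weak, misleading responses.
records = [
    Record(0.1, -0.9, "reject"), Record(0.2, -0.8, "reject"),
    Record(0.4, -0.6, "reject"), Record(0.9, 0.9, "accept"),
    Record(0.8, 0.7, "accept"), Record(0.0, 0.1, "reject"),
    Record(0.05, 0.2, "reject"),
]
model = provide(records, strengths=[0.0, 0.5], criterion=1.0)
print(model)
```

In this toy data set the first selection, which uses all records, does not reproduce the human's results, whereas the second selection, restricted to strong responses, does, so the loop stops there and returns the collection formed from that selection.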

[0158]Those skilled in the art will appreciate that the information processing device may operate multiple data processing algorithms, such as but not limited to a data processing algorithm for performing the steps of collecting, selecting, assigning and forming, and another separate data processing algorithm for performing the steps of comparing and providing artificial intelligence.

[0159]The assigning may comprise several intermediate steps like sorting, grouping, and performing a mental assessment of the data, that is identifying or decoding mental processes, identifying or decoding mental states from multiple simultaneous mental processes, and constructing an MDMS data space, for example, in accordance with the present disclosure.

[0160]Besides a single information processing device, parts of the processing of data according to the present method may be performed by multiple cooperating information processing devices, including so-called virtual machines, and information processing devices located at different geographic locations, which processing is deemed to be covered by the scope of the Claims.

[0161]Following the method presented, in case the information processing device operates multiple algorithms as indicated above, all such algorithms may be AI-enabled data processing algorithms.

[0162]In a second aspect, the present disclosure comprises a data processing algorithm comprising artificial intelligence provided in accordance with the data processing method disclosed in conjunction with the first aspect above. That is, a trained AI-enabled data processing algorithm.

[0163]In a third aspect, the present disclosure provides a program product, comprising instructions stored on any of a transitory and a non-transitory medium readable by an information processing device, which instructions are arranged to perform the method according to any of the embodiments disclosed above when these instructions are executed by an information processing device operating at least one AI-enabled data processing algorithm, including any of a computer and a computer application.

[0164]It is a further object of the present disclosure to deploy a data processing algorithm comprising artificial intelligence obtained by the data processing method disclosed in accordance with any of the first, second and third aspects above.

[0165]Accordingly, a fourth aspect of the present disclosure relates to a method of real-time processing operational data originating from a context, in particular a real-life context, the method performed by an information processing device operating a data processing algorithm comprising artificial intelligence provided in accordance with any of the embodiments disclosed above.

[0166]This fourth aspect of the present disclosure relates to the actual use of a trained or learned AI-enabled data processing algorithm, by processing sensed operational data only and not requiring human bio-signal and human conduct data, while performing or handling a task, an operation or observation in a context based on the knowledge and skills of a human expert reflected in the artificial intelligence provided by the AI-enabled data processing algorithm. That is, the operational data are processed representing human participation with the context.

[0167]Thus, in this aspect of the present disclosure, no human bio-signal data and/or human conduct data are required, although same may still be available, while the mental categories and collections of mental categories that have previously been formed continue to be used by the AI-enabled data processing algorithm, for example to provide internal representations of the operational data.

[0168]The artificial intelligence already provided by the AI-enabled data processing algorithm may, in accordance with a further aspect of the present disclosure, be modified by the information processing device based on operational data originating from the context.

[0169]For example, when operational data from the context indicate an error, conflict or other controversy in the performance of an operation or task, et cetera, by the information processing device operating the trained AI-enabled data processing algorithm, the artificial intelligence of the AI-enabled data processing algorithm may be corrected, updated, enhanced, or otherwise modified by the information processing device.
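
As a hedged illustration of such a modification, the sketch below represents the deployed artificial intelligence as one centre value per mental category and shifts the centre of the correct category towards a datum for which the context reported an error. The representation, the function names, the category names, and the update rate are all assumptions made for this example and are not taken from the present disclosure.

```python
from typing import Dict


def classify(centres: Dict[str, float], operational: float) -> str:
    """Deployed operation: pick the category with the nearest centre."""
    return min(centres, key=lambda c: abs(centres[c] - operational))


def correct(centres: Dict[str, float], operational: float,
            correct_category: str, rate: float = 0.5) -> Dict[str, float]:
    """Return updated centres after the context signalled an error.

    The centre of the correct category is moved a fraction `rate` of the
    way towards the datum that was handled wrongly.
    """
    updated = dict(centres)
    updated[correct_category] += rate * (operational - updated[correct_category])
    return updated


# Hypothetical centres of a previously trained algorithm.
centres = {"accept": 0.85, "reject": 0.25}
datum = 0.6
before = classify(centres, datum)

# Operational data from the context indicate that this outcome was an error.
centres = correct(centres, datum, correct_category="reject")
after = classify(centres, datum)
print(before, after)
```

No human bio-signal data or human conduct data are used in this update; only operational data from the context drive the correction.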

[0170]In a fifth aspect, the present disclosure provides a data processing system, comprising means arranged for performing the data processing method disclosed in conjunction with the first, second and third aspects above. In general, such a processing system includes at least one information processing device arranged for operating at least one AI-enabled data processing algorithm, and equipment in data communication with the information processing device for sensing operational data originating from a context, human bio-signal data and human conduct data of a human participating in that context.

[0171]The present disclosure provides a mechanism that assesses, correlates, outputs, or provides other products of human objective, subjective and intuitive intelligence, directly and automatically, optionally making use of but not explicitly requiring actions from a human participating in a given context.

[0172]Using the methods outlined above, this allows aspects of human intelligence, such as used strategies, skills, categories, and logical reasoning, for example, to be learned by or otherwise transferred to an AI-enabled data processing algorithm—such as in the form of labels, weights, connections, neural network structure, model topology, functions, representations, meta parameters, decision trees, or descriptors—which can then reproduce such aspects autonomously, without requiring the participation of the human.

[0173]The use or involvement of bio-signals not only allows subconscious, intuitive, or otherwise automatic aspects of intelligence to be revealed and included, but also significantly improves the ease of use and comfort for a human user participating in an AI training context, and avoids cognitive overload or distraction from the ongoing context by AI training or learning scenarios and instructions that may be complex to understand, for example.

[0174]Nor is the data input to the AI-enabled data processing algorithm limited to those data that can be consciously, explicitly generated by the human. The method presented may significantly speed up training and operation of an AI-enabled data processing algorithm compared to the training of AI-enabled data processing algorithms requiring explicit user actions, for example.

[0175]In a sixth aspect, the present disclosure relates to using mental categories for providing artificial intelligence by an information processing device operating at least one data processing algorithm enabling artificial intelligence.

[0176]An embodiment of the present method, system, and program product results in what is called a Situationally Aware Mental Assessment for Neuroadaptive Artificial Intelligence, SAMANAI, tool by which an AI-enabled data processing algorithm may provide artificial intelligence from a human brain directly in contexts, environments or scenarios wherein the human participates, which contexts may comprise multiple sources of information, processes, tasks, events, etc.

[0177]This SAMANAI tool can be applied for increasing the learning rate of an AI-enabled data processing algorithm by integrating preprocessed evaluation of the human mind, with the effect that the learning will be quicker and less erroneous than when using explicit human input, such as button presses, given through AI training or learning scenarios and instructions.

[0178]The SAMANAI tool can be applied to personalize any AI-enabled data processing algorithm, either a strong or weak AI, by assessing subjective and/or moral human values used in a given context environment and creating mental categories to be incorporated into the AI-enabled data processing algorithm, for future decision making in solving or completing a task, operation, or any type of handling based on these human values.

[0179]The SAMANAI tool enables context dependent valuations, interpretations, assertions, labelling, etc. by the AI-enabled data processing algorithm of operational data in contexts or environments, handling multiple, versatile, and non-predefined tasks.

[0180]As an untrained AI-enabled data processing algorithm is incapable of evaluating the world on its own, by definition, SAMANAI provides information to the learning process that would not be available otherwise.

[0181]The above-mentioned and other aspects of the present disclosure are further illustrated in detail by means of the figures of the enclosed drawings.

BRIEF DESCRIPTION OF THE FIGURES

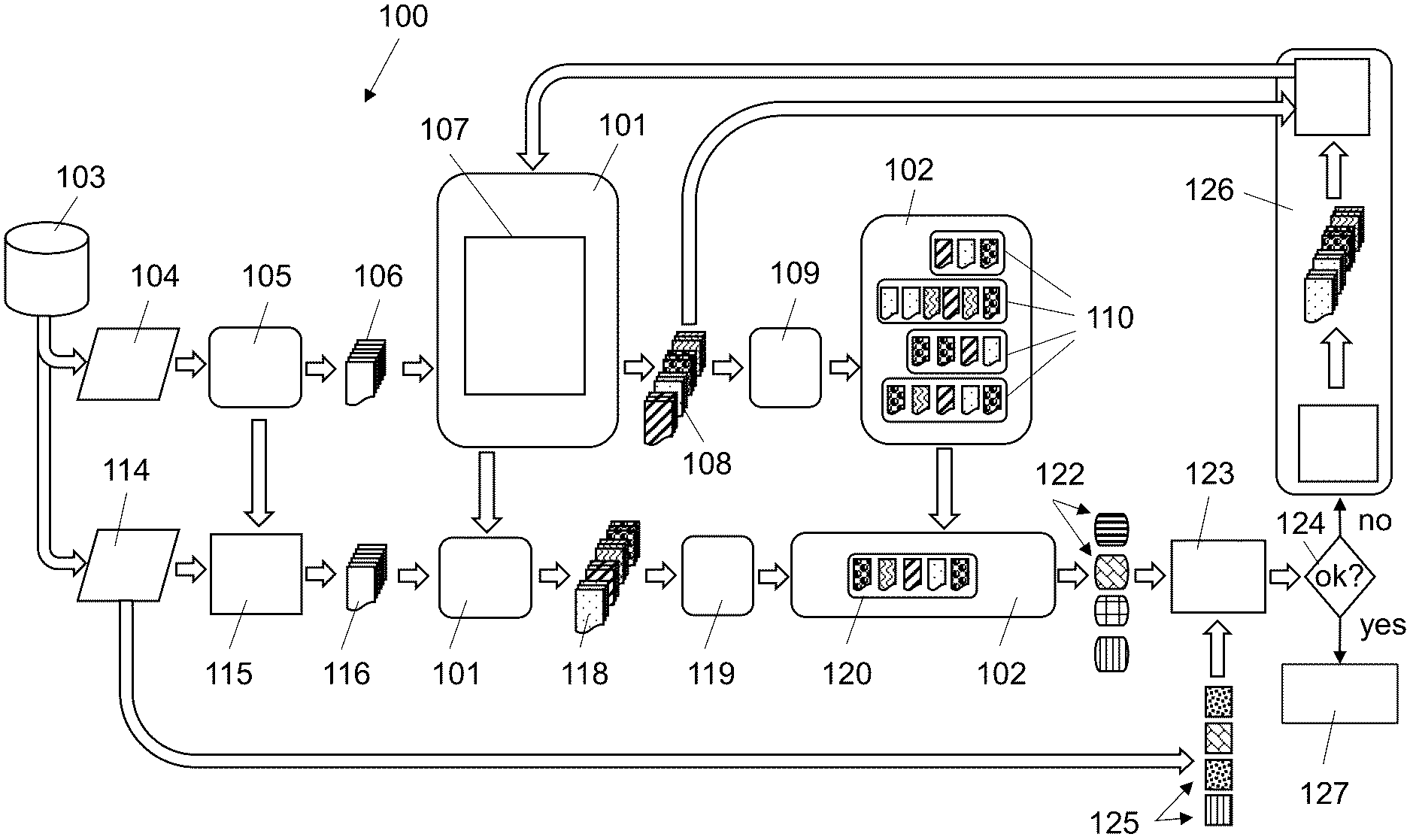

[0182]FIG. 1 illustrates an example, schematically, of a general set-up for practicing the present disclosure, both for training purposes and deployment in a simulated or real-life operational application.

[0183]FIG. 2 shows an example electrode placement of a passive brain-computer interface for registering brain activity signals based on electroencephalography, which can be used for operating the method according to the present disclosure.

[0184]FIGS. 3-7 illustrate, schematically, an example based on the method according to the present disclosure.

[0185]FIG. 8 shows, in a graphical illustration, an example comparison of the performance of the method according to the present disclosure with that of prior art AI training methods, for the example illustrated in FIGS. 3-7.

[0186]FIG. 9 illustrates, schematically, in a process type diagram, example steps of an embodiment of the method according to the present disclosure.

DETAILED DESCRIPTION